Week in Review for Violent Extremism and Terrorism Analysis: 2023-03-06

Happy Monday, everyone. Here is your recap for the week of March 6, 2023. Feel free to send recommendations for next week my way.

1) START has published its Profiles of Individual Radicalization in the United States (PIRUS)

The PIRUS database contains important information about trends in radicalization and mobilization to violence in the US. Some highlights from their most recent publication:

The authors found that in 2021, nearly 50% of U.S. extremist offenders mobilized less than 12 months after being exposed to extremist views; some mobilized within a few weeks.

From 1970 to 2021, far-right extremists make up the largest ideological group in the database (n=1678), followed by Islamist extremists (n=579). The remainder of the individuals in the data are far-left extremists (n=537) or individuals in the “single-issue” category (n=409), whose beliefs vary.

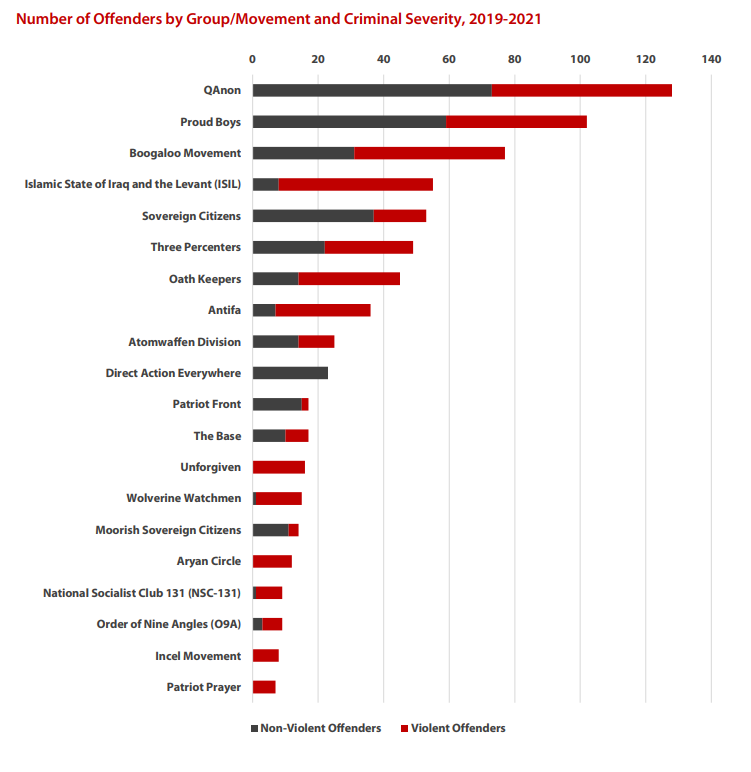
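To put those counts in proportion, here is a minimal sketch that converts them into shares of the database. It assumes the four categories are mutually exclusive and together cover every individual in PIRUS, which the wording above suggests but does not state outright; the function name `ideology_shares` is just illustrative.

```python
# Counts as reported above (PIRUS, 1970 to 2021).
# Assumption: the four categories do not overlap and cover the whole database.
counts = {
    "far-right": 1678,
    "Islamist": 579,
    "far-left": 537,
    "single-issue": 409,
}


def ideology_shares(counts):
    """Return each group's share of the total as a percentage (one decimal)."""
    total = sum(counts.values())
    return {group: round(100 * n / total, 1) for group, n in counts.items()}


total = sum(counts.values())
shares = ideology_shares(counts)

for group, pct in shares.items():
    print(f"{group}: {counts[group]} of {total} ({pct}%)")
```

Under that assumption, far-right extremists account for a little over half of the 3,203 individuals, roughly three times the share of the next largest group.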

Approximately 10% of the far-right extremists in PIRUS were motivated by recent conspiracy theories. While most extremist movements promote conspiracy theories, over the past five years there has been a pronounced increase in offenders motivated by conspiratorial beliefs tied to QAnon, 5G technology, and the anti-vaccination movement.

From 2019 to 2021, more U.S. offenders were connected to the QAnon conspiracy theory than to any other extremist group or movement.

2) VOX-Pol & ISD have launched the REASSURE report

This report documents the harms faced by researchers of online terrorism & extremism, how they cope, and why institutions are failing them. I am very happy to see such a project, as this is a topic that I have advocated for both professionally and as a researcher in the field.

As the report finds:

Academic research into online extremism and terrorism carries unique risks. The first is repeated exposure to distressing content, such as the detailed analysis of thousands of videos or images produced by ISIS. The second is the potential to be targeted by illicit actors, online and offline, through doxing, trolling or material threats. To-date there has been little guidance for researchers on how to deal with either mental health issues caused by repeatedly viewing violent and offensive material or the challenges of staying virtually and physically safe. There has also been no comprehensive study on what those challenges or risks are.

Some key findings:

Interviewees’ belief in the critical importance and ‘real world’ impact of online extremism and terrorism research;

A third of interviewees did not report harm beyond that of any job;

Two thirds reported some harms, with more than half saying those harms were significant;

Almost half of interviewees had had no awareness of the potential risks of researching in this sub-field before beginning their research;

Nine interviewees reported death threats, some credible;

More than half of interviewees turned to the community of researchers for help when faced with harm(s), feeling that their work was so specialised, only that community could sufficiently understand their experiences;

Approximately a third of interviewees had discussed their research with an ethics board, most of them getting the impression that the board’s priority focus was institutional protection;

Identity mattered with regard to harms, with female researchers and researchers of colour affected by their work, or targeted by extremists, in particular ways;

Junior researchers reported the most harms; in addition, they risked professional harm if, as a protective mechanism, they sought to remove themselves from public spaces (e.g., media appearances, social media).

Unfortunately, few institutions provided adequate formalized training, care, or support for (online) extremism and terrorism researchers, as I have highlighted in past blog posts.

3) Message Deletion on Telegram: Affected Data Types and Implications for Computational Analysis

In a recently published study, Kilian Buehling examined the impact of missing or deleted messages on Telegram and how they might affect your analysis and limit your conclusions. The findings revealed that message deletion introduces biases into the computational collection and analysis of Telegram data. Further, message ephemerality reduces dataset consistency, lowers the quality of social network analyses, and affects the results of computational content analysis methods, such as topic modeling or dictionaries.
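As a rough illustration of how you might gauge this in your own data, the sketch below estimates how many messages are missing from a collected channel by looking for gaps in message IDs. This is not necessarily the method used in the study. It assumes that message IDs within a channel increase by one per message, so a gap hints at something that was deleted or never collected; gaps can also come from other causes (for example, items your scraper skips), so treat the result as an upper-bound hint rather than a measurement. The function name `estimate_missing` and the sample IDs are hypothetical.

```python
def estimate_missing(collected_ids):
    """Estimate missing messages from gaps in a channel's message IDs.

    Assumption: IDs are sequential within a channel, so every ID between
    the lowest and highest collected ID should exist if nothing is missing.
    """
    ids = sorted(set(collected_ids))
    if not ids:
        return {"expected": 0, "observed": 0, "missing": 0, "missing_share": 0.0}

    expected = ids[-1] - ids[0] + 1  # IDs we would see with no gaps
    observed = len(ids)              # IDs actually collected
    missing = expected - observed
    return {
        "expected": expected,
        "observed": observed,
        "missing": missing,
        "missing_share": missing / expected,
    }


# Hypothetical sample: IDs 4, 7 and 8 are absent from the collection.
sample_ids = [1, 2, 3, 5, 6, 9, 10]
report = estimate_missing(sample_ids)
print(report)
```

If the missing share is large, or differs a lot between channels, that is a signal to be cautious about network measures and topic models built on the collected messages.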

4) RAN Reporters: Sicilian P/CVE practitioners work against polarisation and extremism

At the European Center of Studies and Initiatives, practitioners work across Palermo and beyond to prevent polarisation and the rise of extremism, by building a more inclusive society. This report follows them around the city – in community centres, cafes, offices, online – as they work tirelessly with local residents and migrants to build resilience to hate and extremism.

5) The Growing Concern Over Older Far-Right Terrorists: Data from the United Kingdom

David Wells in his latest publication for CTC Sentinel examined recent attacks, disrupted plots, and arrests in Europe and the United States, which have suggested that the terrorist threat posed by older extreme far-right individuals might be increasing. A deep dive into the United Kingdom, which has seen five attacks by extreme far-right men over the age of 47 since June 2016, indicates that most attackers had limited direct connections to the organized extreme far-right, conducted attacks involving limited but rapid planning, and—perhaps as a result—had relatively limited impact in terms of casualties. These attacks coincided with (and likely led to) significant increases in the number of extreme far-right-linked men aged 51 and over being referred to the U.K.’s Prevent program and discussed within its Channel program. The U.K. case study and data raise important policy questions regarding the likely effectiveness of preventing and countering violent extremism (P/CVE) interventions for older demographics in the United Kingdom and elsewhere.

6) Trends in Terrorist Use of the Internet in 2022

Arthur Bradley and Charley Gleeson offered a brief summary of the trends in terrorist use of the internet in 2022, and what we can expect for 2023.

Terrorists were resilient online in 2022 and have continued to adapt their methods to ensure content remains available, such as through targeting of specific technologies and editing of content.

This is happening particularly through the creation of dedicated websites and servers which host large volumes of terrorist content and often remain online for long periods of time. Current mitigation strategies for domain abuse are failing to effectively counter this threat.

Current crisis response mechanisms also do not adequately support small platforms, despite increased, deliberate targeting of small platforms by terrorists and their supporters to share attacker-produced content such as manifestos and livestreams.

7) Twitter insiders: We can't protect users from trolling under Musk

A BBC investigation finds that the company is no longer able to protect users from trolling, state-co-ordinated disinformation and child sexual exploitation, following lay-offs and changes under owner Elon Musk. Exclusive academic data plus testimony from Twitter users backs up their allegations, suggesting hate is thriving under Mr. Musk's leadership, with trolls emboldened, harassment intensifying and a spike in accounts following misogynistic and abusive profiles.

8) Getting the most out of the Wayback Machine

Craig Silverman offers a quick, must-read guide on how to leverage the Wayback Machine for research or investigations.

9) The Boogaloo Bois Are Plotting a Bloody Comeback: ‘We Will Go to War’

In her latest piece, Tess Owen dives into how, over the last six months, the Boogaloo Bois have returned to Facebook and are using the platform to funnel new recruits (and “OG Bois”) into smaller subgroups, with the goal of coordinating offline meet-ups and training.

10) In Florida, far-right groups look to seize the moment

This NPR piece highlights an important dynamic in the violent extremist and terrorist space: extremist and terrorist actors want to, and regularly do, leverage ordinary individuals, activists, journalists, and researchers to amplify their content and mainstream their narratives.

Large public stunts, metapolitical narratives, exclusive interviews, and obviously over-the-top social media posts are some of the tools that violent extremist and terrorist actors use to have their content amplified in the mainstream by those who would oppose them. As one of the leaders of a white nationalist group put it: "What we're really going for is people putting it on social media and spreading it around and pushing the conversation in the public arena". It is important to always think about what you are seeing, why you are seeing it, who is carrying out the action or activity, why they are doing it, and whether they are seeking to take advantage of you.